
Drawing Lessons from Silicon Valley’s 1980s PC Innovation to See Opportunities in AI Hardware

Lauren Pan - Oct 09, 2025

This content comes from a sharing session that IceWhale Technology held within FreeS Fund. It reviews the key transformations, development trends, critical events, and underlying constant consumer demands of Silicon Valley’s PC industry in the 1980s. The article is long, covering the state of chips in the 1980s, the launch and spread of PCs, the evolution of DOS and Windows 1.0 from 1980 to 1990, early PC killer apps, and cold-start scenarios. We hope you will read it patiently, and that it helps inform your investment decisions and product innovation in AI hardware and applications.

Borrowing a quote from Ray Dalio of Bridgewater Associates:

The idea that human history has repeating patterns is just reality. Maybe “cycle” isn’t the right word for this; perhaps it should be “pattern,” but I think either describes the process.

—— Ray Dalio

The Rise of the PC, the Process of Informatization, and the Four Key Elements

Computer History Museum, 1980s Silicon Valley

Apple II – 1977

MOS Technology 6502, 8-bit, color, $1200+, 8 expansion slots

Author: Rama

License: CC BY 2.0

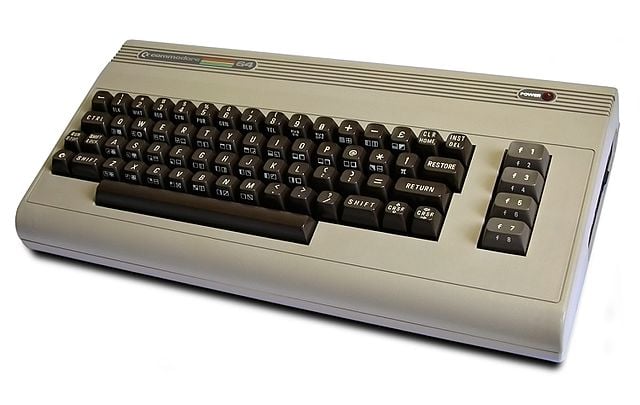

Commodore 64 – 1982

$595 -> $299

Author: Bill Bertram

License: Creative Commons Attribution-Share Alike 2.5

Today, as OpenAI, Google, and Microsoft define an “era of intelligence” built on large models, let’s first go back to the “information age” that began with the birth of the PC in 1976, the year the Apple I appeared. Steve Jobs and Steve Wozniak launched it at the Homebrew Computer Club, a geek community, priced at $666.66. Its debut at the club was much like a Kickstarter crowdfunding project today: it was aimed only at geeks, and buyers had to supply and assemble parts themselves, kit-style. Early sales were only a little over 200 units, but the product laid the foundation for Apple and gave Jobs and his team their first batch of seed users.

Shortly after, in 1977, Apple released the Apple II. This generation had a more refined appearance and added color display, expansion slots, and an integrated case, making it easier for geeks to expand and DIY, although its other core specifications did not change much. The Apple II was a milestone: at $1,298, it was priced far below the expensive commercial computers of the time.

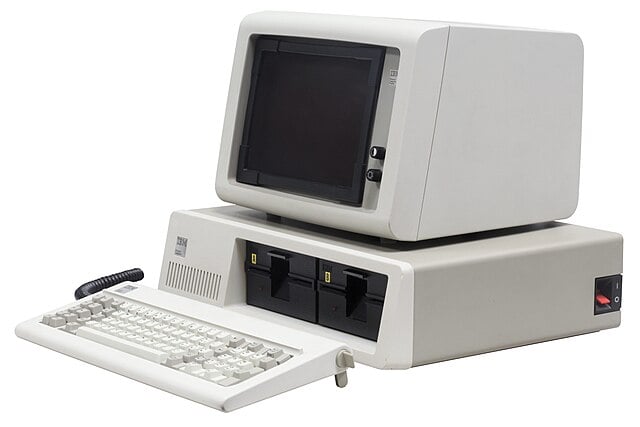

Four years later, IBM, reportedly under market pressure, dispatched a lean team of 12 on a project codenamed “Project Chess” to assert its position as the industry leader; as the leading enterprise, it needed to make a strong statement. The result was the IBM PC, built on an Intel processor with an open hardware architecture. This openness let other manufacturers build compatible machines, which in turn fostered the Wintel ecosystem and quickly made IBM’s PC standard the one the market accepted.

Commodore, maker of the 1982 Commodore 64, is another company worth noting, even though it did not last. In its early days, it got several key strategies right. The C64 offered leading graphics and audio at a competitive $595, which was well received. Commodore also prioritized the European market, which supplied over half of its revenue; by leveraging local distribution networks and advertising, it gained popularity quickly and built a solid base in the global home computer market.

Just as Reddit today has numerous communities devoted to large models, such as ChatGPT, LocalLLM, and Stable Diffusion, the early days of every era draw large numbers of talented people and ideas out of online and offline communities. This is familiar today: many tech leaders hung out on BBS forums when the internet first arrived, before dispersing into various industries. The large-model communities at top universities today have similar attributes.

What is more interesting is that such clubs tend to fade away within a decade. The pattern is this: when a new category emerges, it attracts enthusiasts who are very active in the community, proposing ideas and even building early prototypes. As large companies step in and innovation shifts toward commercialization, these bottom-up, community-born ideas mature and gain substance. But the communities themselves share a common fate: they flourish during periods of active innovation, then fade as the industry matures and giants emerge. The Homebrew Computer Club followed this boom-and-bust pattern, and so do the communities around today’s models, 3D printing, and quadcopters.

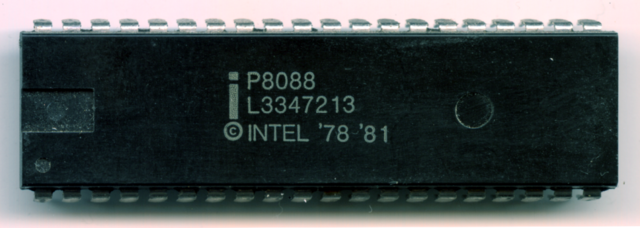

The Intel 8088, a classic processor released in 1979 and used in the IBM PC. Author: ZyMOS

Second, let’s look at the chips of the time, the foundation of the PC category. The PC as a category is inseparable from steadily falling chip costs and “just enough” computing power: being good enough and affordable is what let PCs enter the mass market. The Intel 8088 is a typical example. It kept the 16-bit internal design of its predecessor, the 8086, but narrowed the external data bus from 16 to 8 bits, which lowered system costs and made it the core chip of the IBM PC.

At the time, IBM’s main commercial and military computers were very large and powerful, but complete overkill for the personal market. The 8088 was a deliberate step down, offering balanced computing power at a lower cost. It is much like today’s NAS (Network Attached Storage) devices, which scale commercial servers down to a size and computing power suitable for home use, giving individuals their own small computing solutions.

If NVIDIA’s H200 is the commercial leader today, who is developing the ASIC chips that will bring models into various computing terminals like AI PCs or AI NAS?

The Evolution of Systems – Every Generation Touts Its “Friendly User Interface”

Just as every company today claims to have an “intelligent system”

Author: Vadim Rumyantsev

public domain

Tech Enthusiasts, Small Businesses

Command-line interface

Author: leighklotz

Creative Commons Attribution 2.0 Generic

Enterprise Users

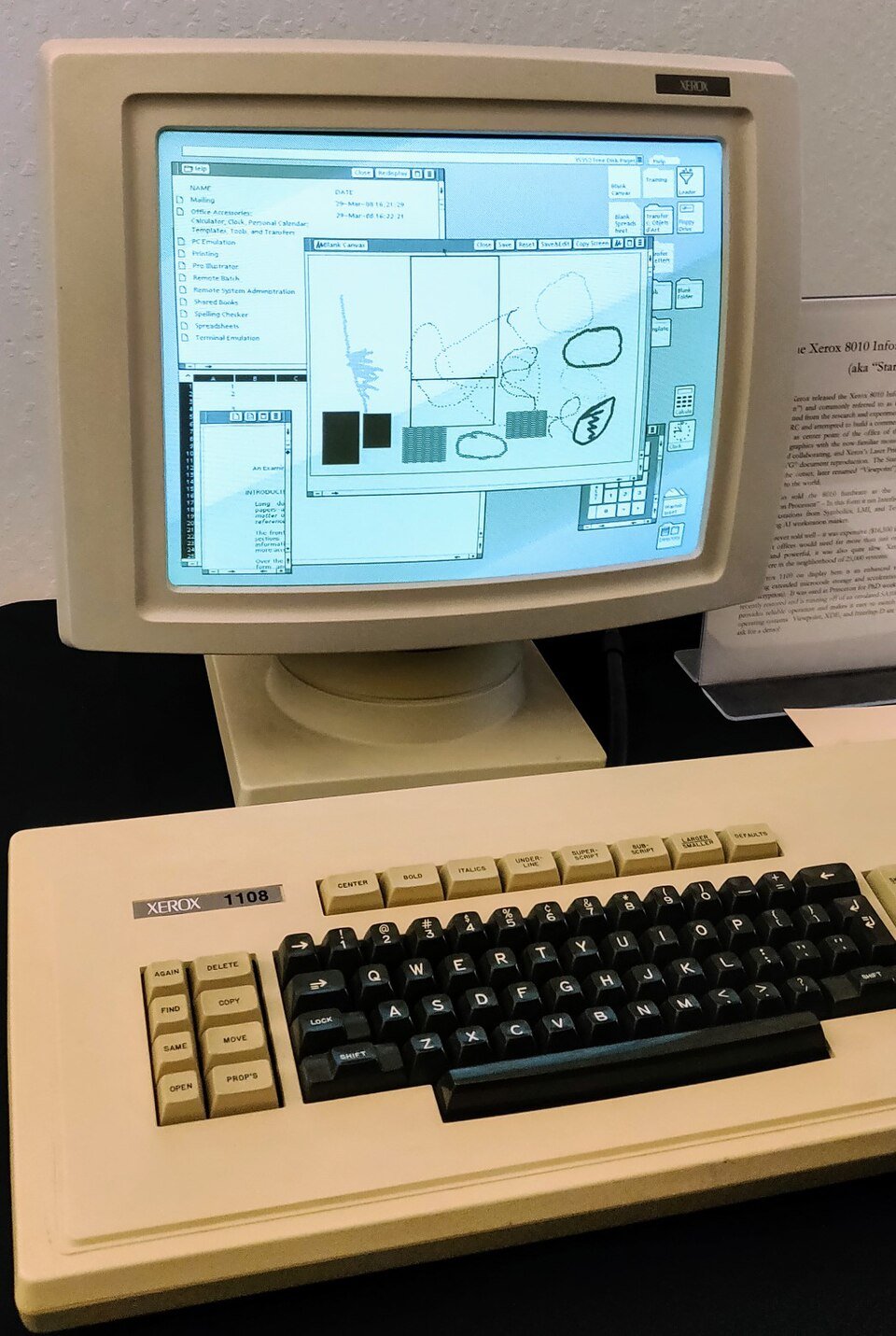

First to introduce a GUI; A luxury item priced at $16,595…

Author: Eric Chan from Hong Kong

Creative Commons Attribution 2.0

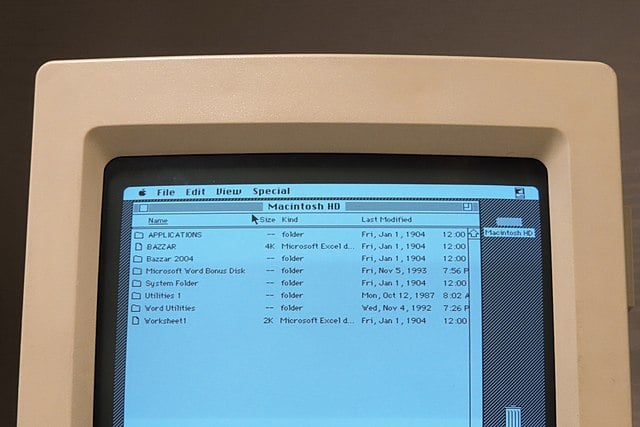

Mass Consumers, Creative Professionals, Education

Widespread adoption of GUI

DOS – Disk Operating System

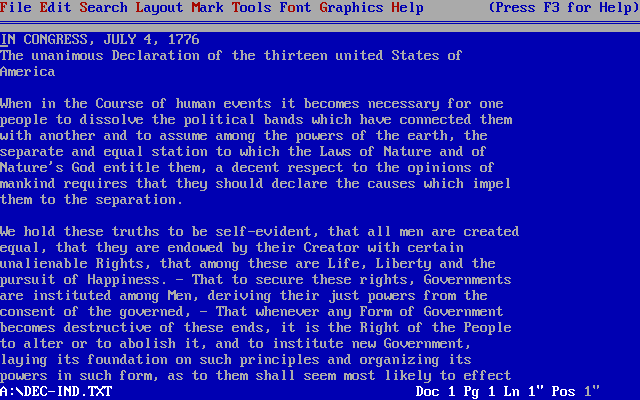

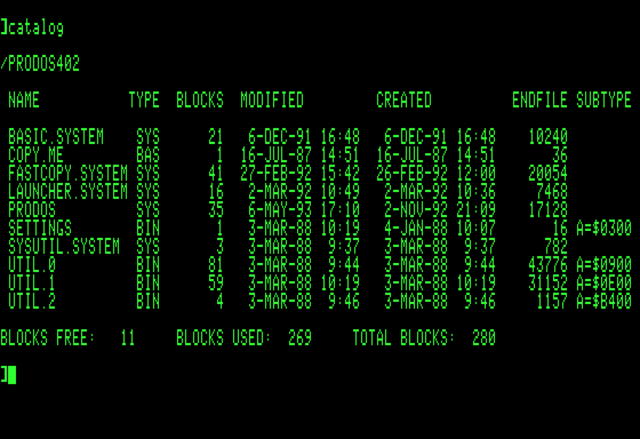

Third, let’s look at the early operating systems. Much like fine-tuning models today, they were something only engineers could tinker with. Around 1978-79, only about ten thousand engineers in Silicon Valley worked with DOS systems, which were entirely command-line based with no graphical interface. At that stage, operating systems were far from part of daily life for enterprises or the general public, much as AI models today remain in the hands of a group of tech geeks.

DOS only began to gain wider attention in 1981 with the launch of IBM’s first PC, and it was still a command-line system without a GUI. Computing back then looked much like AI today: it took many tech geeks and engineers adjusting and integrating things repeatedly to make specific applications work. What truly brought PCs and operating systems into the enterprise was the graphical user interface (GUI) of the Xerox Star, which kicked off the first real wave of user expansion.

In 1984, the graphical interface Apple launched with the Macintosh expanded the user base further into creative, educational, and other professional fields, gradually opening the way to mass use of operating systems. Even so, DOS and GUI systems coexisted for a long time, with companies maintaining two separate systems to serve different needs.

The Early 1980s Application Ecosystem: What We Call “Killer Apps” Today

Lotus 1-2-3 – 1983

Author: Odacir Blanco

WordPerfect – 1985

License: Public Domain

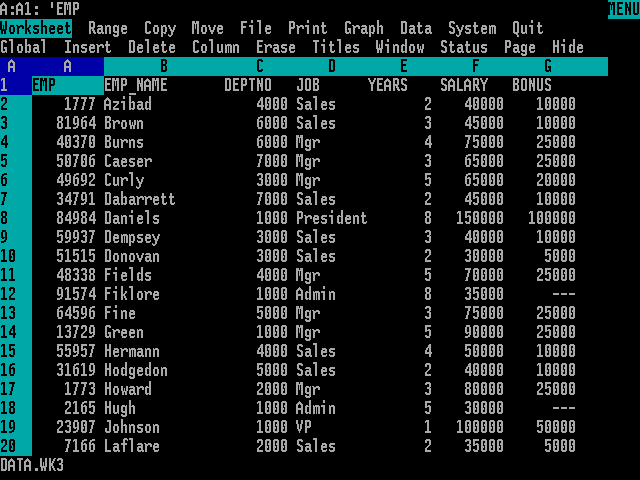

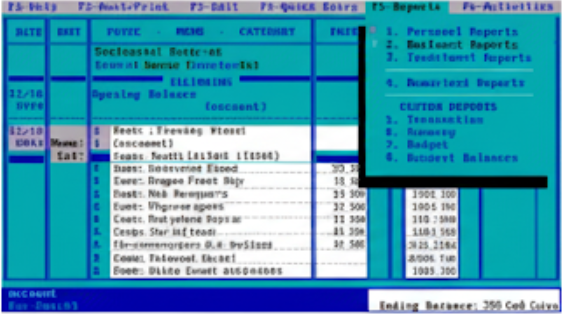

Fourth, consider the application ecosystem that developed alongside system and hardware capabilities. Below are some representative applications and a glimpse of how they spread during the PC productivity revolution.

With these early interfaces, the market had not yet reached consumer scale and consisted mainly of productivity scenarios. Some applications began to stand out, such as Lotus 1-2-3, a famous spreadsheet program used heavily for financial work and a forerunner of Excel, and WordPerfect, released in 1985 and used mainly in the legal and academic fields. These programs did not run in a polished graphical interface but in text-mode DOS, so knowledge workers had to learn their command and keystroke operations to get editing done.

In academic research, using PCs to digitize documents and collaborate brought huge efficiency gains, so by 1988 PC penetration in academia was very high for file transfer, email, and text editing. It was not until 1989, however, as CPU power and GUI capabilities improved, that PCs began to have a major impact on industries such as printing and advertising design. Today looks somewhat similar: although OpenAI has released a video world model, it has not yet been rapidly applied to practical scenarios, because computing resources and interface technology take time to mature.

CorelDRAW – 1989: Graphic Designers, Printing Industry

Quicken – 1984: Personal Users, Small Businesses

Flight Simulator – 1985: Flight Enthusiasts, Students

In the early days of a new computing platform, applications that go deep into vertical scenarios still hold immense value for the industry. By analogy, I believe that next year, once TPU computing power in PCs is ready and Windows, as the standard middle-layer operating system, can supply powerful AI computing to upper-layer applications, a new batch of Copilot-like AI applications will emerge that run directly on the edge device.

In this context, Quicken went deeper into business scenarios than Lotus had. It offered a friendlier interface and more configurability than typical DOS software, and it was built specifically for personal finance and small-business needs. This kind of depth gave early applications room to survive.

However, these applications were expensive. Lotus 1-2-3, for example, cost nearly $500, a very expensive solution in 1985. This suggests that early productivity scenarios were driven mainly by buyers with strong purchasing power.

There were also games and simulators for enthusiasts, such as Microsoft’s “Flight Simulator” on the PC, which offered more diverse, lightweight experiences and attracted new users who liked to explore and experiment. The early PC ecosystem was therefore built from a mix of heavy-duty productivity tools, penetration into small and medium-sized businesses, industry and academic research, and a few interesting breakout applications. The process took a long time, however, because the underlying DOS and GUI technologies developed relatively slowly.

Application vendors like Lotus played a key role. They were not operating system vendors; OS vendors focused on the system’s reliability, resource scheduling, and scalability. In the 8- to 9-year window from 1982 to 1990, Lotus seized the opportunity to fill a market gap. Apple and Microsoft did not release complete office suites until around 1990, which gave these independent applications a 7- to 8-year head start. Riding the popularity of the IBM PC and DOS, they quickly moved into enterprise, financial, and accounting use. These users had strong data-processing needs, and the combination of new computers and Lotus software fully penetrated these scenarios.

Windows 1.0 and Ballmer’s “Crazy” Sales Pitch

Back to 1985: Lotus’s market share had already exceeded 50%. Given its high $495 price, it is easy to see why Steve Ballmer, promoting Windows 1.0, stressed: “We offer a chess game, a spreadsheet, and image processing for just $99, not $500 or $600.” At the time, software price was a highly attractive selling point. Beyond the operating system itself, specialized graphics software such as CorelDRAW, somewhat similar to the later Photoshop, went on to give users professional graphics functions.

Lotus 1-2-3

Company: Lotus Development Corporation

Background: Lotus 1-2-3 was developed by Lotus Development Corporation, founded by Mitch Kapor in 1982. It was the first IBM PC software to integrate spreadsheet, graphics, and database management functions, and it quickly became one of the most popular applications, especially among business and enterprise users.

User Profile: The main users were enterprise users, especially financial analysts, accountants, and managers. They typically had some technical knowledge and worked intensively with data.

Main Use Cases: Used for data management, complex financial modeling, budgeting, report generation, and various other forms of data analysis work. The powerful features of Lotus 1-2-3 made it the top choice for spreadsheet use in enterprises.

1983: Lotus 1-2-3 was launched and quickly became the market leader, especially on IBM PC compatibles.

1985: Market share exceeded 50%, with a price of $495.

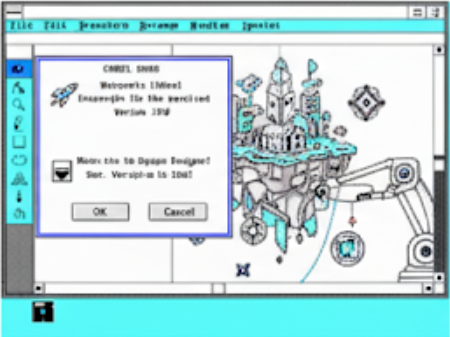

CorelDRAW

Company: Corel Corporation

Background: In the late 1980s, with the popularization of graphical user interfaces (GUIs) and personal computers (PCs), the graphic design and desktop publishing markets grew rapidly. Traditional design processes (manual drawing and typesetting) began to transition to digital.

User Profile: Users who had some understanding of computer graphic design but were not necessarily technical experts.

- Professional designers and illustrators: Needed precise vector drawing tools to create illustrations, logos, and other design works.

- Desktop publishing (DTP) professionals: Needed to combine text and graphics to produce books, magazines, promotional materials, etc.

- Small and medium-sized businesses and freelancers: Used CorelDRAW to create business logos, advertisements, and marketing materials without expensive dedicated design hardware and software.

1989: CorelDRAW 1.0 was the first software to combine vector graphics design and desktop publishing functions, and its launch led a revolution in the graphic design field. This version supported features like multiple pages, curve editing, and word processing.

From the Acquired MS-DOS 1.0 to Windows + Office

| Time | Title | Details |
|---|---|---|
| 1981 | MS-DOS 1.0 | Confirmed partnership with IBM |
| 1982 | MS-DOS 1.25 | Licensed to third-party compatible brands |
| 1983 | MS-DOS 2.0; Microsoft Word | Enhanced system functionality; support for hard drives and directory structures |
| 1985 | Windows 1.0 | Added a graphical interface on top of MS-DOS |
| 1987 | Windows 2.0 | Better graphics support and performance; overlapping windows and shortcut keys |
| 1988 | MS-DOS 4.0 | Introduced the DOS Shell graphical user interface |
| 1989 | Microsoft Office | Provided integrated office automation for Windows |

Microsoft’s rise owed less to its initial products than to its excellent business strategy. Early on, it showed keen business acumen by acquiring a third-party operating system called 86-DOS [yes, they bought it…], which made it an important partner for IBM. Surprisingly, in the second year Microsoft expanded quickly by licensing its system to other hardware manufacturers. The modern parallel is how, once Tesla defined industry standards, numerous companies followed suit and drove the whole ODM ecosystem; a similar dynamic is now shaping AI PC standards.

Once Microsoft had defined the standard, hardware manufacturers began to act. Returning to today’s AI PC track and edge AI applications, we will see many laptops with 40 TOPS of AI computing power come to market, and Qualcomm is making similar moves. This introduces new variables: the hardware is being upgraded, and the middle-layer operating system becomes more important, because it must allocate those 40 TOPS effectively across the needs of many upper-layer applications. Back in the 1980s, Microsoft invested so heavily in operating system development that for a long time it had no capacity to compete with Lotus or WordPerfect.
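The article does not say how an operating system should divide an NPU’s budget among applications. As one illustration only, here is a minimal Python sketch assuming a max-min fair (“water-filling”) policy, a textbook allocation rule swapped in for clarity; real OS schedulers time-slice work and are far more complex. The function name `allocate_tops`, the app names, and the demand figures are all hypothetical.

```python
def allocate_tops(capacity: float, demands: dict[str, float]) -> dict[str, float]:
    """Split a fixed compute budget (in TOPS) across apps with max-min fairness.

    Hypothetical policy: requests smaller than an equal share are granted
    in full, and the leftover is re-split among the larger requests.
    Assumes all demands are non-negative.
    """
    alloc: dict[str, float] = {}
    remaining = capacity
    # Serve the smallest requests first so they are fully satisfied.
    pending = sorted(demands.items(), key=lambda item: item[1])
    while pending:
        fair_share = remaining / len(pending)
        app, demand = pending[0]
        if demand <= fair_share:
            # Small request: grant it fully and redistribute what is left.
            alloc[app] = demand
            remaining -= demand
            pending.pop(0)
        else:
            # Every remaining request exceeds the fair share, so each gets it.
            for other_app, _ in pending:
                alloc[other_app] = fair_share
            break
    return alloc


if __name__ == "__main__":
    # Hypothetical apps competing for a hypothetical 40 TOPS NPU.
    requests = {"local_copilot": 25.0, "live_captions": 8.0, "photo_enhance": 20.0}
    print(allocate_tops(40.0, requests))
    # prints {'live_captions': 8.0, 'photo_enhance': 16.0, 'local_copilot': 16.0}
```

The small captioning request is met in full, and the two heavier apps split the remaining 32 TOPS evenly. Max-min fairness is only one possible policy; an OS could equally weight foreground apps or prioritize latency-sensitive ones.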

Only in the third year did Microsoft begin to imitate WordPerfect [the system absorbs key applications], and this continued until 1989. Over those eight years, Microsoft consolidated third-party licensing of its system and, in 1985, began selling Windows 1.0 on its own. Notably, Windows 1.0 arrived a full four years after Xerox’s GUI system, which shows how lengthy operating system development is. Early Windows was mostly bundled with hardware, with sales in the tens of thousands of units in the first two to three years and cumulative shipments of five to six million units within eight years.

The Productivity Revolution vs. Every Household

At that time, the PC market was not limited to North America; developed European countries also imported these machines by sea. Users were concentrated in heavy productivity scenarios. It was not until 1989, when applications like image processing began to emerge, that new use cases appeared, and even the launch of GUI systems did not immediately bring PCs into the mass consumer market. PCs truly entered ordinary households around 1994 with the rise of the Netscape browser and the internet, as more and more people who used computers at work began buying them for home.

This path from a productivity revolution to a consumer explosion is clearly visible in the PC era. Today information spreads rapidly, but whether AI can empower every consumer scenario still needs time to verify. In the early stages, we may need to pay closer attention to changes on the production and supply side.

Another key factor is the evolution of human-computer interaction. The introduction of the mouse created a new mode of human-computer interaction, which greatly influenced the penetration of PCs. Similarly, we can reflect on the current structure by looking at Microsoft’s development trajectory. If today’s OpenAI is validating the possibility of an AI operating system in the cloud, then on the edge, without the support of an operating system, upper-layer applications will struggle to grow. When the operating system and hardware achieve key breakthroughs, downstream applications may experience explosive growth.

Today, we interact through natural language and video streams, and these new variables will also affect the application scenarios of AI. To summarize briefly, the reason Microsoft took from 1981 to 1989 to develop DOS and GUI in parallel was that they needed to be compatible with a large number of hardware devices. This also explains why Steve Jobs once looked down on the Windows system, considering it complex and unaesthetic. However, from a business perspective, Microsoft took steady steps: from acquiring code and launching a GUI to releasing Office eight years after Lotus, they consolidated their ecosystem position in every way.

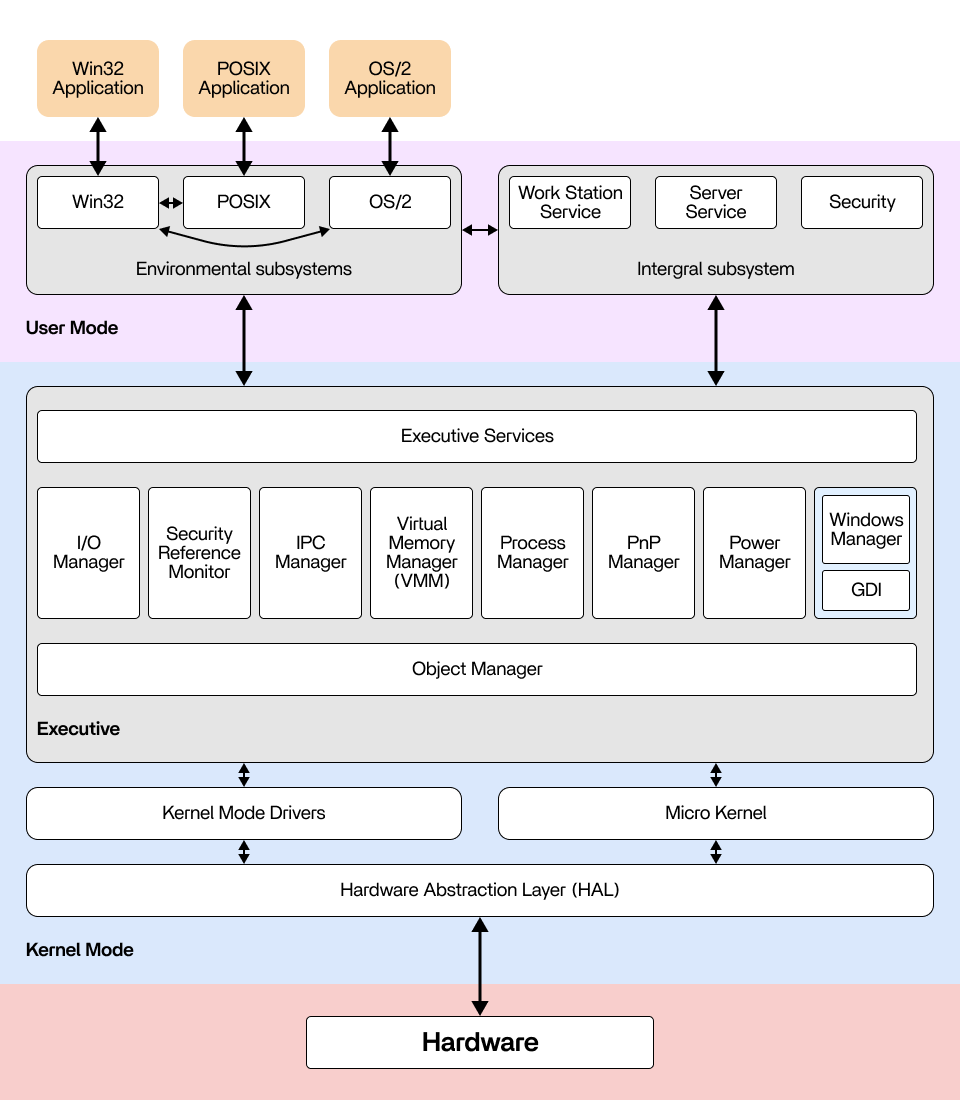

A Glimpse into the Current Windows Architecture Through the Lens of Windows NT

Windows NT architecture diagram (component labels translated)

Summary of the Four Elements – Seeing the Variables and Constant Demands

Chips, System, Applications, and Devices

| Storage/Compute | System | Application | Product |
|---|---|---|---|
| Driver | Foundation | User Value | Vehicle |

Several key elements in this process are worth noting. First is the evolution of storage and computing units. Early chip and storage costs did fall, though not dramatically, and the pace of that decline was tied to the progress of Moore’s Law. Likewise, edge computing is being deployed today because technology has reached a tipping point.

Second, the operating system, as an important middleware, undertakes key tasks such as resource management and device adaptation. Although early systems were not powerful, their importance was self-evident.

Third, early killer applications could make money, but if they did not go deep enough, they could eventually be replaced [what we now call vertical scenarios, which require depth]. Whether application vendors can move down into the operating system layer is still an open question.

Ultimately, value is captured by a commercial vehicle. In the early days, people bought hardware as the vehicle, but with the establishment of system platforms, the importance of hardware relatively decreased. In the era where “platform is king,” the operating system not only shared the value but also nurtured a rich application ecosystem. This phenomenon was also verified in the mobile internet era.

We can map these four elements (hardware, operating system, applications, and human-computer interaction) onto the current development of AI. On the demand side, we should ask why people need computers and why they need AI models. The unchanging demand is for efficient, convenient storage and editing of information, and every generation of computing devices pursues more natural, easier human-computer interaction, an eternal theme.

Finally, the dissemination and sharing of information are also important factors driving technological development. From early email to later browsers, the evolution of dissemination methods has met people’s deep-seated needs for digitization. Today, we generally believe we are in a wave of intelligentization, just like the past information revolution, and we can use historical patterns to draw analogies and think about future directions.

- Technology Foundation Layer (Key to Storage/Compute): The development of core hardware technologies such as processors (computing power) and storage (storage media).

- Platform Layer: The basic platform of the PC, providing interfaces with hardware and a running environment for upper-layer applications.

- Application Layer: Application software is the main motivation for users to buy PCs and is an important factor in attracting users to a certain platform.

- Transaction Vehicle: Hardware products are the physical devices that end-users purchase, available for users to choose and buy.

Demand – Digitization:

- Retention: A convenient medium for permanently storing information.

- Production: The constant need for efficiency in processing text, data, images, and information in productivity scenarios.

- Dissemination: The efficiency of collaboration.

Key Events and Trends After 1990

| Year | Event | Description |
|---|---|---|
| 1993 | Intel Pentium processor released | Significantly improved CPU performance and efficiency |
| 1998 | Windows 98 and USB 1.1 standard | Made external devices plug-and-play |
| 2000 | Intel Pentium 4 | High-performance desktop processor |
| 2003 | Explosion of Internet applications | MySpace and Facebook, Amazon and eBay |
| 2005 | Laptop sales surpass desktop computers for the first time | Intel Centrino platform, integrated with low-power processors |
| 2007 | The rise of netbooks | Netbooks based on Intel Atom processors appear |
| 2011 | Ultrabook | Ultrabook concept, laptop vs tablet |
| 2018 | Smartphone | Replaced other devices to become the main mobile computing device |

The table above reveals some very interesting information. From the 1990s on came the Intel Pentium processor, the explosion of internet applications, Windows 98, USB 1.1, netbooks, and ultrabooks. This series of innovations outlines an unchanging trend in computing: the internet truly entered every household.

During this period CPUs became lighter and more power-efficient, and USB 1.1 made peripherals such as mice easy to connect. The rise of the internet brought large numbers of consumers to personal computing devices. Notably, PC development shows a clear trend toward lightness and portability, and an early microcosm of the mobile phone was the PDA.

Desktop Computer – 2000

Notebook – 2005

Ultrabook – 2012

The images above are AI-generated simulations

The PDA revolution of the 1990s offers an interesting perspective. Time is limited, so we won’t delve into it here, but reviewing this trajectory may offer key analogies for how AI PCs or AI NAS will iterate.

I’ve discussed this with colleagues at Lenovo. Their early market penetration already involved browsers. In 2000, Lenovo launched a program that made dial-up internet access easier, streamlining network setup and connection, enabling more users to access the internet. This helped them quickly capture the market. Then, the era of branded computers arrived.

One constant in the evolution of PCs is the shift toward portability and thinness, enabling individuals to access the digital world anytime, anywhere. Another trend is the shift from early heavy production to multi-scenario penetration. So, in which vertical industries will AI initially focus? When will it achieve widespread adoption? This is closely related to the underlying computing power, device form factor, and operating system maturity—these are all interconnected. We see that the latter half of the PC era embodies this multi-scenario penetration.

Today, new variables such as GPUs, TPUs, and the NPUs built into RISC-V chips are driving system evolution, and these system changes will permeate the application layer. When the time is right, many interesting AI-native applications will emerge, making local Copilots even more powerful. The industry chain has many key elements, however, and the changes among its key players call for careful thought and observation.

Changing Factors, Unchanging Trends

1. Portability: From heavy to portable, lower power consumption, and lighter devices – significantly reducing the cost of entry into the digital world.

2. Multi-scenario: Gaming, drawing, programming, and related peripherals – significantly expanding the boundaries of digital applications.

What is Key to Establishing a New Category? Specialized Devices vs. General-Purpose Computing Devices

In this process, an interesting question occurred to me: how does today’s wide variety of AI hardware compare with the PC’s development in the past? Which device innovations will be swallowed by the PC, and which will not? The PC was as dominant then as smartphones, laptops, and cloud computing are today. So in which scenarios did specialized and general-purpose devices diverge, with the specialized device never being replaced by a single, unified one?

I found that the game console Nintendo launched in 1983 used a chip derived from the same MOS 6502 found in the Apple I and II, yet it became a specialized device, and buying a PS5 or Xbox today follows the same logic. So when a vertical scenario has enough depth in computing needs, system requirements, and use cases, it can form an independent category of specialized devices. The PDA of 1999 is another example. It used relatively outdated, low-power chips to serve as a personal digital assistant. Although it was not yet a phone, just a low-cost tool for scheduling and contact management, it was much cheaper than a PC and held a small niche among portable devices, and it can be seen as a predecessor of the mobile phone. Later laptops never fully replaced it; it was the mobile phone that eventually overtook it.

Between 1980 and 2000, did a single, unified computing device emerge in the computer industry? The keyword is “scene depth.”

The boundary between specialized and general-purpose devices inspires us to think: which of today’s AI smart hardware will be swallowed by AI phones, and which will independently diverge into new categories like AI toys? In terms of scene depth and asset investment, we can use game consoles and PDAs as analogies for in-depth thinking.

As an aside, early 8-bit processors were no match for today’s ARM processors; their performance was comparable to the display controller in a home refrigerator or microwave. In other words, a 1980 computer had roughly the computing power of your refrigerator. The point is that, in hindsight, these machines were far less powerful than people might imagine, yet they laid the foundation for the entire PC industry and the internet.

| Comparison Dimension | PDA | PC in 1999 |
|---|---|---|
| Computing Power | Low-performance processor (e.g., Motorola DragonBall 16 MHz), 2-16 MB RAM, limited storage space; weak graphics and multimedia processing capabilities. | High-performance processor (e.g., Intel Pentium III 500 MHz); 64-256 MB RAM, 10-20 GB hard drive capacity; powerful graphics and multimedia processing capabilities. |
| Cost | Price range: $200-$600; Mainly for Personal Information Management (PIM), high cost-effectiveness. | Price range: $1000-$2000; Provides comprehensive computing functions, wide range of applications, high cost-effectiveness. |
| Power Consumption | Low-power design, battery-powered; Long battery life, power consumption from a few hundred milliwatts to a few watts. | High power consumption, typically 100-300 watts; Requires continuous power supply, poor portability. |
| Application Scenarios | Schedule management, contact management, task lists; Simple text processing, notes, email; emphasizes portability and immediacy. | Office work (word processing, spreadsheets); Entertainment (games, music, movies); Internet browsing and communication, software development, graphic design, etc. |
| Portability | Small size, light weight; easy to carry and use anytime, anywhere. | Large size, heavy weight; for use in a fixed location, not easy to carry. |

Today’s AI PC, Applications, and New Opportunities

Back to the present: although the elements of the industry chain have changed, people’s demand for retaining, producing, and disseminating data has not. At an abstract level, needs are shifting from operating a GUI to having a copilot or an intelligent agent complete code or tasks automatically. What should remain constant is the need to acquire and store information. With Copilot in place, creators can provide some context and let the machine help draft creative scripts or show them what their peers are doing.

For example, a company could use an agent to track relevant industry innovations in real time and generate weekly reports automatically. These ways of retaining and acquiring production data will become smarter. The device that carries them will definitely differ from a traditional PC: it will be an always-on, real-time computing device. In the past, people needed a mouse and a GUI to be productive; once intelligence is embedded directly in the computing device, it can act on its own. Human-computer interaction then no longer has to rely on a mouse and a display: you send a task, and the device completes it.

The path to all this follows a pattern visible in microcosm over the past 40 years, because the underlying demands of these scenarios have stayed consistent. In its early stages, the new productivity driven by GPT will still be dominated by productivity scenarios, just as Lotus 1-2-3 was in the DOS era. We can build on this foundation, add the new production factors, and look for likely early application scenarios. Combined with the gaming industry, the image processing industry, and the ways of producing, acquiring, and disseminating data discussed earlier, we can in principle map out the full space of possibilities.

Retention: Machine acquires information, provides personalized recommendations.

Production: Models participate in decision-making and assist in the production process.

Dissemination: Machine automatically handles distribution and dissemination.

New Production Factors

We can now see four new production factors emerging: the development of GPUs and TPUs, new operating system models, data privatization, and the amount of unique user data held. Combined, these factors may give rise to a brand-new “compute-and-storage-integrated” computing device, one that occupies a different position from mobile phones, laptops, and even the public cloud. I will try to lay out its characteristics clearly in a table.

Private Data, Large Models, GPU/TPU Compute Power, Applications

Author: Brian Kerrigan

- Private Data: High-quality proprietary data held within an organization, or data acquired privately by machines, is a key asset for training and optimizing AI models.

- Large Model Capability: The ability to understand, generate, and reason, adaptable to various tasks and scenarios.

- GPU or ASIC Compute Power: Specialized high-performance hardware for inference.

- AI Applications: New applications based on LLMs that are integrated into various scenarios.

Scenarios and Carriers – One Table

| Comparison | Mobile Phone | Private Cloud | Public Cloud |
|---|---|---|---|
| AI Application | Lightweight, Copilot | Private inference capability, Agent | OpenAI, Agent |
| Large Model Capability (parameters) | 3B | 7B – 100B | 405B |
| Computing Performance | Mobile chip, low power; 6 W, 20 TOPS | GPU / ASIC, medium-high performance; 200 W, 200 TOPS | High-performance cluster, elastic scaling |
| Operating System | Android, iOS; runtime execution; full data access | Private cloud OS; real-time task execution; full data access | Cloud-platform-specific system; real-time task execution; partial authorization |
| Data Storage | 2 TB | Scalable capacity, hundreds of TBs | Scalable capacity |
| Battery Life | Battery pack, 12 hours | Plugged in, ♾️ | Plugged in, ♾️ |

Because of battery-life limits, computing keeps becoming lighter, which is what led to today's mobile phones and laptops. The trajectory of technology has always bent toward portability and collaboration, both long-term human demands. Much as consumers in e-commerce gravitate toward higher-quality brands and lighter experiences, people want more portable batteries and phones. However, computing power and battery life are bounded by energy and power-consumption limits, which caps the intelligence of models that can run on these devices, currently typically at around 3B parameters.

This means that when Windows or the next-generation Android is ready, it will likely build on 3B-level models and Copilot, inspiring a new generation of AI applications such as AI-driven browsers and email reply tools. The room for these applications is limited because they can only run 3B-level models behind the scenes, but they will still be very interesting. Mobile phones and laptops will inevitably go through this stage because, from a silicon process perspective, AI computing power per watt will not improve dramatically in the short term.
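The per-watt argument can be made concrete with the illustrative figures from the comparison table (mobile: 6 W, 20 TOPS; private cloud: 200 W, 200 TOPS). The sketch below is a back-of-the-envelope calculation, not a benchmark: it assumes efficiency (TOPS per watt) stays roughly flat, as the paragraph suggests, and ignores thermals, memory bandwidth, and real-world utilization. The names `CARRIERS`, `tops_per_watt`, and `watts_needed` are hypothetical helpers introduced only for this illustration.

```python
# Illustrative figures copied from the comparison table; they are rough
# numbers from the article, not measured benchmarks.
CARRIERS = {
    "mobile phone": {"tops": 20.0, "watts": 6.0},
    "private cloud": {"tops": 200.0, "watts": 200.0},
}


def tops_per_watt(tops: float, watts: float) -> float:
    """AI throughput delivered per watt of power budget."""
    return tops / watts


def watts_needed(target_tops: float, efficiency: float) -> float:
    """Power budget required to reach target_tops at a fixed TOPS/W."""
    return target_tops / efficiency


if __name__ == "__main__":
    for name, spec in CARRIERS.items():
        eff = tops_per_watt(spec["tops"], spec["watts"])
        print(f"{name}: {eff:.2f} TOPS/W")

    # Assumption: per-watt efficiency stays roughly flat. Under that
    # assumption, a phone-class chip would still need ~60 W to reach the
    # always-on device's 200 TOPS, far beyond a battery-powered budget.
    phone = CARRIERS["mobile phone"]
    phone_eff = tops_per_watt(phone["tops"], phone["watts"])
    print(f"watts for 200 TOPS at phone efficiency: {watts_needed(200.0, phone_eff):.0f} W")
```

The phone chip is actually the more efficient of the two (about 3.33 versus 1.00 TOPS/W); what limits it is the total power budget, which is the constraint the paragraph describes.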

At the other end is pure cloud computing. The problem with the cloud is trust: are you willing to hand your data from platforms like Notion, Slack, and Lark to a cloud vendor? Or to give a single cloud service provider full access to your Taobao, WeChat, and financial accounts? That clearly carries a large psychological cost. The cloud will therefore occupy the top tier, providing the most capable models through API calls and reaching mainly large enterprises.

But in the middle, an opportunity has emerged to build a new operating system. This operating system will act as the carrier for an intelligent agent running on a device that is powered on 24 hours a day. You can send it tasks from your phone or laptop, and it will execute them in the background. It has large data storage capacity, and because it is plugged in rather than battery-powered, it can be equipped with a hundred-watt-class GPU providing about 200 TOPS of AI computing power. Successive generations of TPUs and NPUs will further reduce the cost of that compute, much as the early 8088 chip evolved.

On this basis, a real-time, sufficiently intelligent model can be built to serve everyone. Mapped to today, these are the 7B to 100B models that everyone is releasing, which, after quantization, can run entirely on a 200 TOPS computing architecture. With suitable operating system support, a rich ecosystem of intelligent agent applications will emerge. These system-level models, fine-tuned for the device, are what we often call edge models. Although the industrial chain has many elements, this new device has a clear position. As with a laptop you own, you can log into your various accounts without worrying too much about data security, because it is your personal computing device, smart enough to serve you 24 hours a day.
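Besides compute (TOPS), whether a quantized model "runs entirely" on a device also depends on whether its weights fit in memory. The sketch below shows the standard weight-only arithmetic: parameters × bits per weight ÷ 8 bytes. The 4-bit width is an assumption for illustration (the article does not specify a quantization scheme), and the estimate deliberately leaves out the KV cache, activations, and runtime overhead, which add more on top. `weight_memory_gb` is a hypothetical helper name.

```python
# Weight-only memory estimate: parameters x bits per weight / 8 bytes.
# Assumption: KV cache, activations, and runtime overhead are ignored,
# so real memory use is higher than these figures.


def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory (GB, 1 GB = 1e9 bytes) needed to hold model weights."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Model sizes taken from the comparison table (3B, 7B-100B, 405B).
    for params in (3, 7, 100, 405):
        fp16 = weight_memory_gb(params, 16)
        int4 = weight_memory_gb(params, 4)
        print(f"{params:>4}B params: {fp16:7.1f} GB at 16-bit, {int4:6.1f} GB at 4-bit")
```

At 4 bits, a 7B model needs roughly 3.5 GB for its weights and a 100B model about 50 GB, so the upper end of the 7B to 100B range depends on the device's memory capacity as much as on its 200 TOPS of compute.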

Creators, Engineers, and Knowledge Workers

Creators, Freelancers, Coders

Setting aside front-end innovations like glasses and headphones, on the back end, it is highly likely that a personal computing device will emerge, transitioning from productivity to consumer use. This is a device that shifts from pure computing to being compute-and-storage-integrated. Today, data mobility and collaboration are enhanced, while the demand for computing power is also increasing. A compute-and-storage-integrated device becomes a necessary carrier for a personal intelligent agent.

Initially, such devices may enter the market through groups like creators, engineers, and knowledge workers. These users typically hold large amounts of rich media data, have asset management needs, and want productivity tools that solve their storage and collaboration pain points. This mirrors the penetration path of early PCs: target users who are willing to pay and have strong productivity demands, and use them as the entry point into this new battlefield.

ZimaCube – The Creator’s Private Cloud

We recently interviewed many more creators and content professionals and uncovered a wider range of application scenarios. In fact, this category has a long road of evolution ahead. ZimaCube's approach is closer to Apple's vertical integration, and we need to rethink how to proceed at each stage. Today, NAS (Network Attached Storage) serves as the carrier for AI, and it has its own iterative process. Within that process, we are commercializing through a vertically integrated private cloud solution for creators.

Hardware is not the barrier but the starting point; it needs a certain uniqueness.

System and applications serve the scenario.

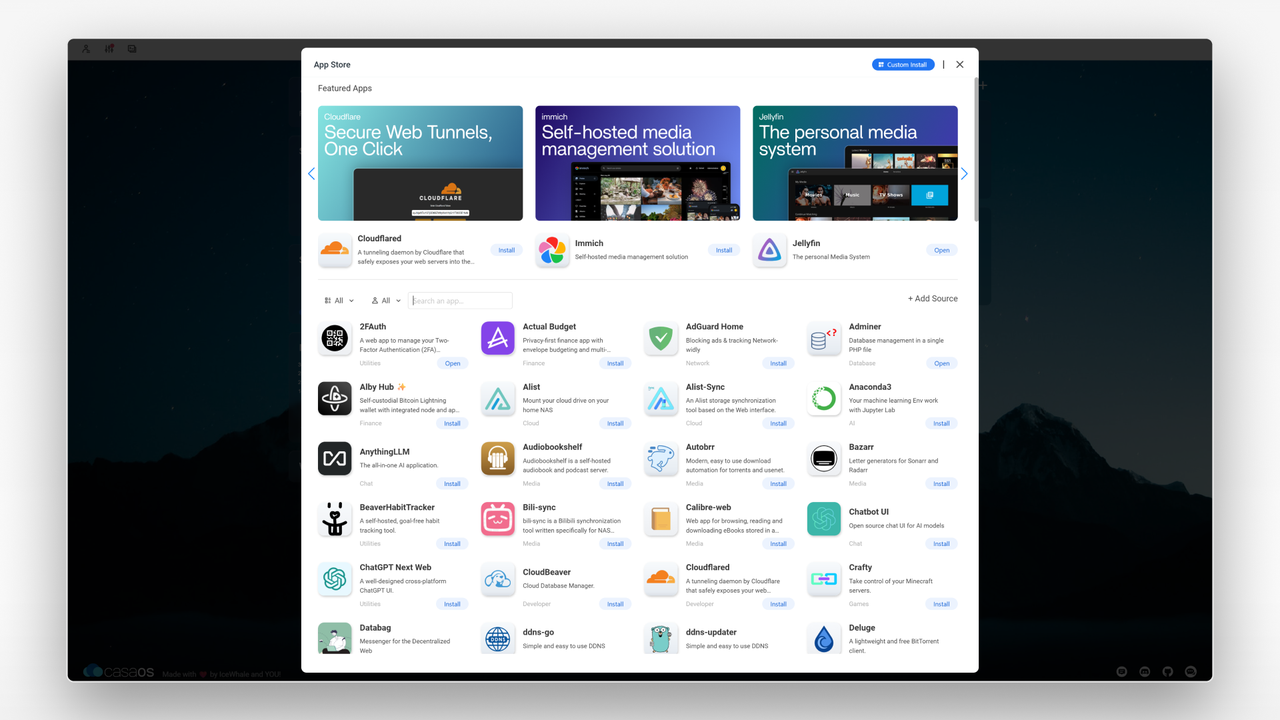

Hardware is where we begin, but applications are where the scenario value lies. An open application ecosystem can help us spot and absorb emerging applications, the next Lotus 1-2-3, earlier. We don't need to rush to pour resources into developing applications ourselves; instead, we should build a platform and grow it through community-based operations.

System and Third-Party Applications

Remain open, incorporate mainstream applications from the LocalLLM community, and build an App Store with documentation and unique application standards.

The Necessity of Combining Systems and Communities in a Global Business Context

However, hybrid hardware and software products are indeed difficult to create. In today’s China, many innovative companies require dual capabilities. In terms of organizational capabilities, on the one hand, they need to follow a “waterfall” approach to hardware management and production processes to control hardware costs and risks; on the other hand, they need to build an agile, iterative logic to update software systems on a weekly or monthly basis.

Communities can be an excellent vehicle for feeding global user needs and feedback back into our software systems. Hardware itself may not require frequent updates. If you sell a power bank, Amazon’s ratings and waterfall management can complete the product definition and a one-year sales cycle. But today, there are few niches for creative companies that rely solely on hardware supply. Most categories that rely on economies of scale are dominated by giants, and there are no new traffic structures that can quickly expand the market.

A Universal Challenge: A Call for the Next Generation of Platform Builders

History tells us that every computing era is ultimately defined by one or a few dominant platforms. Today, building this new platform is a shared opportunity and challenge for all innovators globally. This requires an unprecedented and comprehensive capability that transcends boundaries:

Deep Integration of Hardware and Software: This requires the perfect fusion of the “waterfall” rigor of hardware development with the “agile” iteration of software. Successful innovation is no longer about just hardware or software, but about a seamlessly integrated “Hybrid Product.”

Co-building Ecosystems and Communities: Just as the Homebrew Computer Club sparked the PC revolution, today’s open-source communities (like LocalLLM) are the cradles for the next generation of “killer apps.” A closed system might win for a moment, but only an open ecosystem can win the future.

Therefore, the ultimate lesson from the 1980s is not about geography, but about vision. The victors of that era won not because they were in Silicon Valley, but because they successfully integrated chips, systems, and applications into a platform that empowered people and ushered in a new age.

Today, the stage is set. For entrepreneurs and investors worldwide, the real question isn’t “where” to innovate, but “how” to effectively organize the new factors of production—private data, AI models, and accessible computing power—into a new, human-centric platform that unleashes creativity. This is not a solo performance by any single country or region, but a global endeavor that concerns us all, aimed at reshaping the future of computing.

It all started with an observation: in the next decade, could every household have a small private cloud device? This could serve as a data asset manager for individuals and small organizations, the hub of a smart home, or even a local AI-powered Jarvis. You might call it a NAS, a home server, or something else, but we believe what truly matters is granting everyone the right to their own cloud.